Understanding how vMotion works

This is my first article detailing VMware internals. In it, we discuss how VMware vMotion works and what happens in the background during a vMotion.

About vMotion

vMotion is one of the key features of VMware technology. It is the live migration of running virtual machines (VMs) between physical hosts with zero downtime and continuous service availability. It is transparent to the guest OS and applications as well as to the end user. The primary use case is manual load balancing of your ESX servers: when a host requires hardware maintenance or is heavily overloaded, you can move the running VMs off that host using vMotion. This avoids server downtime, lets administrators troubleshoot physical host issues, and provides the flexibility to balance VMs across hosts. Many people mistake vMotion for HA, but it is essentially a tool for IT admins to achieve zero-downtime load balancing of running VMs. It is also the key feature on which various other capabilities are built, including DRS (Distributed Resource Scheduler), DPM (Distributed Power Management), and FT (Fault Tolerance).

Notes: Migration is the process of moving a virtual machine from one host or storage location to another. Copying a virtual machine creates a new virtual machine. It is not a form of migration. You cannot use vMotion to move virtual machines from one datacentre to another. Migration with Storage vMotion allows you to move a virtual machine’s storage without any interruption in the availability of the virtual machine.

Below is the architecture diagram of vMotion functionality:

Configuration Requirements for vMotion

- HOST Level

- Both hosts must be correctly licensed for vMotion.

- Both hosts must have access to the VM’s shared storage.

- Both hosts must have access to the VM’s shared network.

- VMs that use raw disks for clustering purposes can’t be migrated.

- VMs connected to virtual devices that are attached to a client computer can’t be migrated.

- VMs connected to virtual devices that are NOT accessible by the destination host can’t be migrated.

- 10 GbE network connectivity between the physical hosts is recommended so that the memory transfer completes faster.

- Transfer over multiple NICs is supported; configure them all on one vSwitch.
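
To make the checklist above concrete, here is a minimal sketch of a pre-flight check written in Python. It is not VMware's API: the `Host` and `VM` classes, their fields, and the `vmotion_blockers` function are all hypothetical names invented for illustration, and the check only covers the licensing, storage, network, and device rules listed above.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    # Hypothetical model of an ESX host; not a real vSphere object.
    name: str
    vmotion_licensed: bool
    datastores: set = field(default_factory=set)
    networks: set = field(default_factory=set)


@dataclass
class VM:
    # Hypothetical model of a virtual machine.
    name: str
    datastores: set = field(default_factory=set)
    networks: set = field(default_factory=set)
    clustered_raw_disk: bool = False
    client_attached_device: bool = False


def vmotion_blockers(vm, source, destination):
    """Return the reasons (if any) why this migration would be refused."""
    blockers = []
    for host in (source, destination):
        if not host.vmotion_licensed:
            blockers.append(f"{host.name}: not licensed for vMotion")
        # Both hosts must see every datastore and network the VM uses.
        for ds in sorted(vm.datastores - host.datastores):
            blockers.append(f"{host.name}: no access to datastore {ds}")
        for net in sorted(vm.networks - host.networks):
            blockers.append(f"{host.name}: no access to network {net}")
    if vm.clustered_raw_disk:
        blockers.append(f"{vm.name}: uses raw disks for clustering")
    if vm.client_attached_device:
        blockers.append(f"{vm.name}: has a device attached to a client computer")
    return blockers


if __name__ == "__main__":
    src = Host("esx01", True, {"san-lun-1"}, {"vlan10"})
    dst = Host("esx02", False, set(), {"vlan10"})
    vm = VM("web01", {"san-lun-1"}, {"vlan10"})
    for reason in vmotion_blockers(vm, src, dst):
        print(reason)
```

An empty list means none of the listed rules is violated; it says nothing about other checks (CPU compatibility, for instance) that a real migration would also perform.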

What needs to be Migrated?

We have seen that vMotion moves a running VM between physical hosts. Let’s go one step deeper to understand what actually gets migrated. vMotion leverages the vSphere checkpoint state serialization infrastructure (referred to as the checkpoint infrastructure) to perform the migration.

- Processor and Device state (CPU, Network, SVGA, etc.)

- Disk (use shared storage between source and destination host)

- Memory (Pre-Copy memory while VM is running)

- Network (a Reverse ARP (RARP) broadcast notifies the physical network that the VM is now reachable through the destination host)
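
The network notification in the last item can be illustrated with a small sketch. The code below only builds the bytes of a RARP request frame in the standard RFC 903 layout, using the VM's MAC address as the source; it does not send anything. The function names and the example MAC address are assumptions made for illustration, and the exact frame ESX emits may differ in detail.

```python
import struct

ETHERTYPE_RARP = 0x8035      # EtherType assigned to Reverse ARP
BROADCAST_MAC = b"\xff" * 6  # sent to every device on the segment


def mac_to_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    raw = bytes.fromhex(mac.replace(":", ""))
    if len(raw) != 6:
        raise ValueError(f"not a 48-bit MAC address: {mac}")
    return raw


def build_rarp_frame(vm_mac):
    """Build an Ethernet frame carrying a RARP request for vm_mac."""
    mac = mac_to_bytes(vm_mac)
    # Ethernet header: destination, source, EtherType.
    header = BROADCAST_MAC + mac + struct.pack("!H", ETHERTYPE_RARP)
    # RARP body: hardware type 1 (Ethernet), protocol 0x0800 (IPv4),
    # address lengths 6 and 4, opcode 3 ("request reverse").
    body = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 3)
    # Sender and target hardware address are both the VM's MAC;
    # the IP address fields are left as zeros.
    body += mac + b"\x00" * 4 + mac + b"\x00" * 4
    return header + body


if __name__ == "__main__":
    frame = build_rarp_frame("00:50:56:12:34:56")  # example MAC, made up
    print(len(frame), frame.hex())
```

Because the frame is a broadcast whose source is the VM's MAC, switches that see it arrive on a new port can update their forwarding tables, which is why traffic follows the VM to the destination host.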

Process of vMotion:

Migrating the components listed above involves the following process:

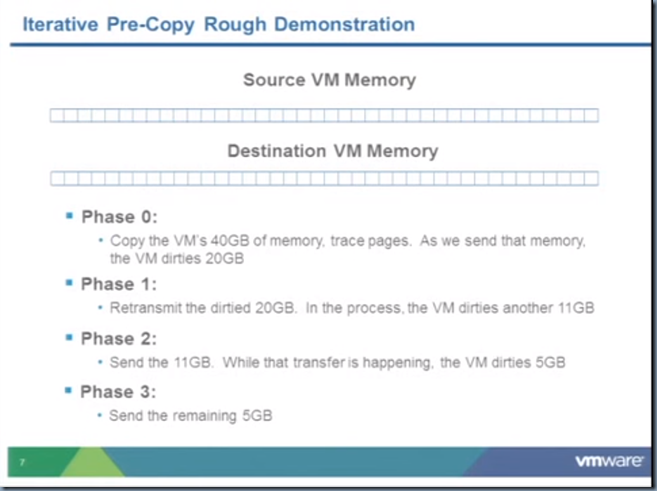

- Pre-copy memory from the source to the destination host. This is achieved through iterative memory pre-copy, which includes the stages below:

- First Phase, ‘Trace Phase/HEAT Phase’

- Send the VM’s ‘cold’ pages (least changing memory contents) from source to destination.

- Trace all the VM’s memory.

- Performance impact: noticeable brief drop in throughput due to trace installation, generally proportional to memory size.

- Subsequent Phases

- Pass over memory again, sending pages modified since the previous phase.

- Trace each page as it is transmitted

- Performance impact: usually minimal on guest performance

- Switch-over Phase

- If pre-copy has converged, very few dirty pages remain

- VM is momentarily quiesced on source and resumed on destination

- Performance impact: increase of latency as the guest is stopped, duration less than a second

- If pre-copy cannot converge, vMotion uses Stun During Page Send (SDPS) to ensure that more active, large-memory virtual machines are still successfully vMotioned from one host to another.

- SDPS intentionally slows down the vCPUs to keep the virtual machine’s memory change rate from being faster than the pre-copy transfer rate. This guarantees that the vMotion operation ultimately succeeds, at the cost of reduced guest performance while vMotion is in progress.
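
The iterative pre-copy stages above can be illustrated with a toy simulation. This is a simplified model, not VMware's implementation: the function name, the 64 MB switch-over threshold, the pass limit, and the rule that SDPS caps the dirty rate at half the link rate are all assumptions chosen to keep the arithmetic easy to follow. Rates are in MB per second.

```python
def simulate_precopy(memory_mb, dirty_rate, link_rate,
                     switchover_threshold_mb=64, max_passes=20, sdps=True):
    """Toy model of iterative memory pre-copy (all parameters are assumptions).

    Each pass sends the pages dirtied during the previous pass. The loop
    stops when the remaining dirty set is small enough to send while the
    VM is briefly quiesced (the switch-over).
    """
    to_send = float(memory_mb)  # first pass covers all of the VM's memory
    rate = float(dirty_rate)
    passes = []
    for number in range(1, max_passes + 1):
        duration = to_send / link_rate
        # Pages dirtied while this pass was on the wire (capped at RAM size).
        dirtied = min(float(memory_mb), rate * duration)
        passes.append((number, to_send, dirtied))
        if dirtied <= switchover_threshold_mb:
            return {"converged": True, "passes": passes, "dirty_rate": rate}
        if sdps and dirtied >= to_send:
            # No progress: the guest dirties memory at least as fast as we
            # send it. Model SDPS by throttling the guest's dirty rate.
            rate = min(rate, 0.5 * link_rate)
        to_send = dirtied
    return {"converged": False, "passes": passes, "dirty_rate": rate}


if __name__ == "__main__":
    calm = simulate_precopy(16384, dirty_rate=200, link_rate=1000)
    busy = simulate_precopy(16384, dirty_rate=1500, link_rate=1000)
    stuck = simulate_precopy(16384, dirty_rate=1500, link_rate=1000, sdps=False)
    for label, result in (("calm", calm), ("busy", busy), ("stuck", stuck)):
        print(label, result["converged"], len(result["passes"]))
```

When the dirty rate is below the link rate, each pass shrinks by the ratio of the two rates, so the dirty set falls off geometrically and switch-over is reached after a few passes. When the guest dirties memory faster than the link can carry it, the model never converges unless the dirty rate is throttled, which is the situation SDPS addresses.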

References:

- ESXi and vCenter Server 5 Documentation > vSphere Virtual Machine Administration > Managing Virtual Machines > Migrating Virtual Machines

- VMware vMotion in VMware vSphere 5: Architecture, Performance & Best Practices (VSP2122)

- Virtual machine performance degrades while a vMotion is being performed (2007595)

- New features vMotion Hen of VMware vSphere 6.0

- vMotion – How it Works – in details….VMworld Video